I chose Cowrie as the key component of my unwanted traffic detection infrastructure.

Cowrie is a superb medium/high interaction honeypot designed to log brute-force attempts and shell interactions launched by attackers over both SSH and Telnet. Cowrie

is very popular among both researchers and enthusiasts due to an optimal combination of rich capabilities and ease of use. It is open-source and is backed by an

active community led by Michel Oosterhof, the project's maintainer, creator, and main developer.

1. Choose the honeypot host

You need to start by choosing a Linux system where to install the honeypot. Since Cowrie is very efficient in its resource consumption, I opted for a tiny

Raspberry Pi 400 computer as the Cowrie host.

2. Install the system dependencies

Install the system dependencies on the Cowrie host:

$ sudo apt-get install git python3-virtualenv libssl-dev libffi-dev build-essential libpython3-dev python3-minimal authbind virtualenv

3. Create a user account

Installing a user without a password is not an absolute requirement, but it is recommended by the Cowrie authors:

$ sudo adduser --disabled-password cowrie

Adding user 'cowrie' ...

Adding new group 'cowrie' (1002) ...

Adding new user 'cowrie' (1002) with group 'cowrie' ...

Changing the user information for cowrie

Enter the new value, or press ENTER for the default

Full Name []:

Room Number []:

Work Phone []:

Home Phone []:

Other []:

Is the information correct? [Y/n]

$ sudo su - cowrie

4. Get the Cowrie code

Clone the cowrie project from GitHub:

$ git clone http://github.com/cowrie/cowrie

Cloning into 'cowrie'...

remote: Counting objects: 2965, done.

remote: Compressing objects: 100% (1025/1025), done.

remote: Total 2965 (delta 1908), reused 2962 (delta 1905), pack-reused 0

Receiving objects: 100% (2965/2965), 3.41 MiB | 2.57 MiB/s, done.

Resolving deltas: 100% (1908/1908), done.

Checking connectivity... done.

$ cd cowrie

5. Set up a Python virtual environment

Technically speaking, this step is not needed, but it is highly recommended to ensure that package updates on the Cowrie host system will not cause

incompatibilities with the honeypot operation:

$ pwd

/home/cowrie/cowrie

$ python -m venv cowrie-env

New python executable in ./cowrie/cowrie-env/bin/python

Installing setuptools, pip, wheel...done.

After you install the virtual environment, activate it and install required packages:

$ source cowrie-env/bin/activate

(cowrie-env) $ python -m pip install --upgrade pip

(cowrie-env) $ python -m pip install --upgrade -r requirements.txt

6. Configure Cowrie

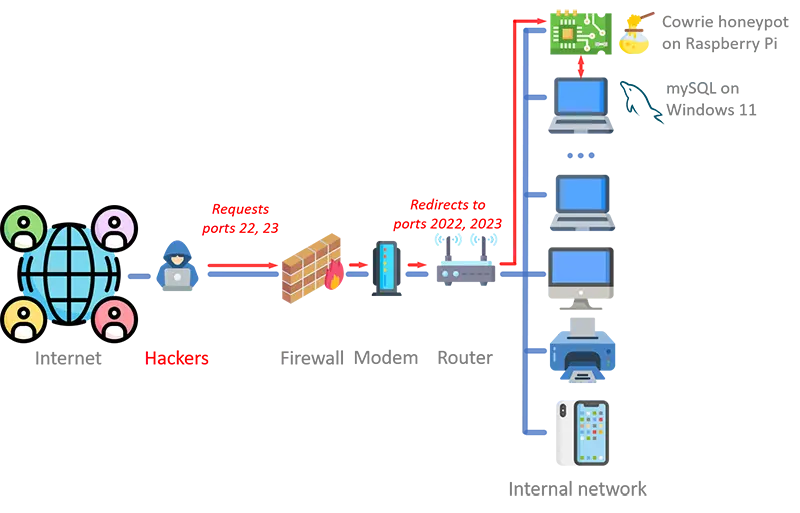

The Cowrie configuration is stored in the cowrie/etc/cowrie.cfg file. To run the honeypot with a standard configuration, there is

no need to change anything. By default, Cowrie accepts traffic over SSH. I wanted the honeypot to also accept traffic over Telnet and change the default ports 22 and 23,

so I modified the configuration file as follows:

[telnet]

enabled = true

...

[proxy]

backend_ssh_port = 2022

backend_telnet_port = 2023

I also wanted to change the default user configurations and the list of credentials accepted to login to the remote shell. These changes are made by modifing the

cowrie/etc/userdb.txt file. Each line in the file consists of three fields separated by the : character,

where:

- Field #1 is the username.

- Field #2 is currently unused and set to

x.

- Field #3 is the regular expression that specifies the list of passwords accepted by the user.

As an example, the following settings configure a username

admin that accepts all passwords except 1) only numeric characters, 2) the

case-sensitive string

admin, and 3) the case-insensitive string

honeypot:

admin:x:!admin

admin:x:!/^[0-9]+$/

admin:x:!/honeypot/i

admin:x:*

7. Customize Cowrie

Optionally, you can change the look-and-feel of the Cowrie interface to make it look more realistic. A number of files allow you to do that:

-

In

cowrie/etc/cowrie.cfg, you can change, for example, the hostname displayed by the shell prompt, the user prompt, the Telnet

username and password prompts, the response from the uname command, the SSH version printed by ssh -V,

etc.

hostname = appsrv02

...

prompt = root>

...

telnet_username_prompt_regex = (\n|^)ubuntu login: .*

telnet_password_prompt_regex = .*Password: .*

...

kernel_version = 3.2.0-4-amd64

kernel_build_string = #1 SMP Debian 3.2.68-1+deb7u1

hardware_platform = x86_64

operating_system = GNU/Linux

...

ssh_version = OpenSSH_7.9p1, OpenSSL 1.1.1a 20 Nov 2018

- In

cowrie/honeyfs/etc/issue, you can change the pre-login banner.

- In

cowrie/honeyfs/etc/motd, you can change the post-login message.

- In

cowrie/honeyfs/proc/cpuinfo, you can change the simulated CPU make-up of the system (e.g., number and type of processors).

- In

cowrie/honeyfs/proc/meminfo, you can change the simulated system's memory allocation and usage.

- In

cowrie/honeyfs/proc/version, you can change the Linux kernel and gcc versions.

8. Forward listening ports

As we saw above, I configured Cowrie to accept SSH traffic over port 2022 and Telnet traffic over port 2023. In order to preserve the fidelity of the decoy,

I opened ports 22 and 23 on the router and forward their traffic to ports 2022 and 2023, respectively, on the system hosting Cowrie.

9. Start Cowrie

Start the honeypot by calling the cowrie/bin/cowrie executable that is part of the Cowrie distribution. An existing virtual

environment is preserved if activated, otherwise Cowrie will attempt to load the environment called cowrie-env that we created earlier:

bin/cowrie start

Activating virtualenv "cowrie-env"

Starting cowrie with extra arguments [] ...

I opted for MySQL running on Windows as the warehouse of my Cowrie-generated data because it's a powerful and mature technology, offers nice API-based data querying

and manipulation functionality, can be installed locally, and — best of all — is open-source and free. Originally, I went with

a Splunk Enterprise solution. Although proprietary, Splunk is free if you keep your data volume under 500 MB per day. That worked fine for about six months, but then

one day the honeypot experienced a spike in traffic of 2.5 TB, which allowed me to see the ugly side of the freemium business model and Splunk's heavy-handed

approach to user management: "pay up or be blocked for a month." As both options were unacceptable to me, I ditched Splunk, moved to mySQL, and never looked back. If

you are interested, my old Splunk-based installation and configuration notes are available here.

Cowrie offers a set of instructions to send its data to a MySQL database that are

significantly simpler than the process described on this page. Those instructions are a great starting point. Ultimately, I decided to go with a custom configuration to go beyond the

bare-bones capabilities offered out-of-the-box by Cowrie. Specifically, I needed the ability to import a modified version of the Cowrie feed and a way to analyze the data

through an API. I also needed to run MySQL on a separate Windows host. The instructions below provide that functionality.

2. Wrangle the Data

You can use the Cowrie data stream as it comes from the honeypot in the form of daily JSON files. This is essentially an event-based feed, where every session or

unwanted interaction with the honeypot is broken down into a series of events that constitute an attack: E.g., connect, attempt to login, execute commands on the

shell, create or upload/download files to the honeypot, disconnect, etc.

I opted for an alternative view of the unwanted traffic based on sessions, not events. To do this, I translated the default Cowrie feed into a new one where the main

unit of information, which will later be stored as a row in a SQL database, is the session, not the event. This required merging all the events corresponding to the

same session into a single "row". This work was part of the data normalization I did when I was using Splunk as my data repository; you can find the details

here. As a reminder, data normalization is the process used to reorganize or ‘massage’ the data

so that it’s easier, faster to work with it. It involves reducing/eliminating data redundancy and ensuring that the data dependencies are implemented in a way that takes

into account the constraints of the underlying database that holds the data. This allows the data to be queried and analyzed more easily. Splunk does not use a

conventional database, so the normalization that resulted in the new session-based feed was all that was needed. But MySQL, our new data store solution, utilizes

a SQL database. For SQL data, normalization often requires splitting large tables into smaller ones and linking them through relationships. And that's exactly what we

had to do. To understand why, let's look at a key aspect of our new session-based feed:

-

Some of the fields in a session are singletons with just one value:

| Field in Original Feed |

Field in New Feed |

Description |

| |

dst_asn |

New - ASN of target IP address (i.e., that of the honeypot) provided by MaxMind |

| |

dst_country |

New - Country of target IP address provided by MaxMind |

| dst_ip |

dst_ip |

Target IP address |

| dst_port |

dst_port |

Target port |

| duration |

duration |

Session duration in seconds |

| input |

commands |

Sequence of commands executed on the shell |

| protocol |

protocol |

Network protocol on which unwanted traffic was sent |

| sensor |

sensor |

Name of the honeypot |

| session |

session |

Unique session identifier |

| |

src_asn |

New - ASN of source IP address (i.e., that of the attacker) provided by MaxMind |

| |

src_country |

New - Country of source IP address provided by MaxMind |

| src_ip |

src_ip |

Source IP address |

| src_port |

src_port |

Source port |

| timestamp |

timestamp |

Time of the start of the unwanted interaction |

| |

traaffic_type |

New - Is the unwanted traffic a port scan or an attack? |

-

Some of the fields are lists with multiple values:

| Field in Original Feed |

Field in New Feed |

Description |

| (login) success|failed |

attempt_logins |

List of login statuses |

| password |

attempt_passwords |

List of passwords attempted |

| username |

attempt_usenames |

List of usernames attempted |

| |

attempt_credentials |

New - List of username\0password credentials attempted |

| hash |

malware_hashes |

List of malware hashes |

| filename, outfile |

malware_sites |

List of malware URLs |

| |

malware_types |

New - List of malware sample types: upload|download|redirection |

| (tcpip) dst_ip |

tcpip_dst_ips |

List of TCP/IP destination IP addresses |

| (tcpip) dst_port |

tcpip_dst_ports |

List of TCP/IP destination ports |

| (tcpip) src_ip |

tcpip_src_ips |

List of TCP/IP source IP addresses |

| (tcpip) src_port |

tcpip_src_ports |

List of TCP/IP source ports |

| ttylog |

ttylog_names |

List of TTY log files capturing attack interactions |

| sha256, url |

vtlookup_files |

List of VirusTotal scanned hashes or URLs |

| is_new |

vtlookup_new |

List of known or unknown ("is new") status of the suspicious samples scanned by VirusTotal |

| positives |

vtlookup_positives |

List of the number of VirusTotal providers who classified the samples as malicious |

| total |

vtlookup_scans |

List of number of VirusTotal provides who scanned the suspicious samples |

Single-valued fields are easy to handle and require no additional processing. They are implemented directly as columns in a SQL database table. But the SQL rules

prohibit/greatly restrict the use of lists in table columns. The solution to this challenge is to do SQL normalization of the data in the Cowrie feed as follows:

-

Create one primary table sessions made of all the single-valued fields/columns.

-

Create one separate secondary table for each of the list-value fields. These tables hold the individual values of their respective lists lists. The secondary

tables link to the primary table by means of a foreign key in the secondary tables that references the

session column in the

primary sessions table. In all, I needed five secondary tables:

-

attempts to keep the values of

attempt_logins, attempt_passwords,

attemtp_usernames, and attempt_credentials

-

malware to keep the values of

malware_hashes, malware_sites, and

malware_types

-

tcpip to keep the values of

tcpip_dst_ips, tcpip_dst_ports,

tcpip_src_ips, and tcpip_src_ports

-

ttylogs to keep the values of

ttylog_names

-

vtlookups to keep the values of

vtlookup_files, vtlookup_new,

vtlookup_positives, and vtlookup_scans

Notice that the commands field is a bit of an anomaly. Technically speaking, it's a comma-separated list of Linux commands. But,

as I use it as a single entity, I'm currently treating it as a single-valued long string field (i.e., as a column in the primary table). I may change this arrangement

at a later time and implement the commands field as a separate secondary table by breaking it down to its individual Linux commands.

Finally, I decided to leave out the new feed a few fields in the original data stream that add limited value to my research.

2. Install MySQL

I installed the Windows version of MySQL from the MySQL Community Downloads area. At the time

of my install, the latest available version of the installer was 8.0.36. Installation is straightforward and self-explanatory:

- Start the MySQL installer for Windows.

- Choose the Custom setup type to be able to choose the MySQL products you want to install.

- In addition to the core MySQL Server, make sure that you install MySQL Router if you want to have API access to your data (more on this later). I

chose to also install MySQL Shell and MySQL Workbench to have user interfaces to configure the database and manipulate the data. Workbench, Shell and

Router are available under Applications in the installation dialog. I did not install the MySQL Connectors (ODBC, C++ and Python).

- For each of the selected products, multiple versions are available. I only installed the latest (8.0.36).

- After the installer downloads and installs the MySQL products you selected, it will ask you to configure MySQL Server and MySQL Router.

- The first configuration step is the selection of the server type and networking parameters. Three server configuration types are available, each with increasing memory

requirements: Development Computer, Server Computer, and Dedicated Computer. I originally opted for Development Computer but, when I later

experienced sluggish performance of my database, I changed to Server Computer. That made a big difference.

- I accepted the networking settings at their default values.

- After the server and networking configuration, you need to set your authentication method. Go with the recommended strong password encryption and set your MySQL root password.

- Next, configure the Windows service and the server file permissions by accepting the defaults.

- Finally, configure MySQL Router. Again, accept the default settings.

After the above installation and configuration is done, the Windows MySQL service will start, and both the

MySQL Shell and

MySQL Workbench will automatically launch.

3. Create a MySQL database for Cowrie

Next, you need to create a MySQL database to host your Cowrie data. The steps are as follows:

- If the MySQL Workbench application is not running, start it.

- Under MySQL Connections, you should see a default instance with the name "Local instance MySQL80". Click on it and enter you MySQL sever root password.

- On the left hand-side menu, select the Schemas tab.

- Click on the Create a new schema in the connected server icon on the top taskbar; the icon looks like a database with a superimposed plus sign.

- Enter a name for the new schema (I named mine

cowrie_normalized) and click the Apply button (twice), then Finish.

- Now, you need to create your database tables:

-

Double-click the newly-created schema and click on the Create a new table in the active schema in connected server icon; the icon looks like a table

with a plus sign.

- Enter a name for the new table; I entered

sessions as the name of my primary table.

-

Repeat the two steps above to create all other tables in your schema. In mine, and as we saw earlier, I make use of 5 additional secondary tables:

credentials, malware, tcpip,

ttylogs, and vtlookups.

-

Then, you need to design your tables by specifying the names and types of your columns (fields). The configuration of my tables looks something like this:

--

-- Structure for primary table `sessions`

--

`sessoin_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`session` varchar(12) NOT NULL, UNIQUE

`commands` longtext

`dst_ip` varchar(15) NOT NULL

`dst_port` int NOT NULL

`dst_asn` int DEFAULT NUL

`dst_country` varchar(45) DEFAULT NUL

`duration` float NOT NULL

`protocol` varchar(6) NOT NULL

`sensor` varchar(48) NOT NULL

`src_ip` varchar(15) NOT NULL

`src_port` int NOT NULL

`src_asn` int NOT NULL

`src_country` varchar(45) NOT NULL

`timestamp` timestamp(6) NOT NULL, UNIQUE

`traffic_type` varchar(6) NOT NULL

--

-- Structure for secondary table `attempts`

--

`attempt_credentials` varchar(513) NOT NULL

`attempt_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`attempt_login` varchar(5) NOT NULL

`attempt_password` varchar(256) DEFAULT NULL

`attempt_session` varchar(12) NOT NULL, UNIQUE, FOREIGN KEY REFERENCES `sessions` (`session`)

`attempt_username` varchar(256) NOT NULL

--

-- Structure for secondary table `malware`

--

`malware_hash` varchar(64) NOT NULL

`malware_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`malware_session` varchar(12) NOT NULL, FOREIGN KEY REFERENCES `sessions` (`session`)

`malware_site` varchar(45) DEFAULT NULL

`malware_type` varchar(8) NOT NULL

--

-- Structure for secondary table `tcpip`

--

`tcpip_dst_ip` varchar(256) DEFAULT NULL

`tcpip_dst_port` int DEFAULT NULL

`tcpip_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`tcpip_session` varchar(12) NOT NULL, FOREIGN KEY REFERENCES `sessions` (`session`)

`tcpip_src_ip` varchar(256) DEFAULT NULL

`tcpip_src_port` int DEFAULT NULL

--

-- Structure for secondary table `ttylogs`

--

`ttylog_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`ttylog_name` varchar(96) NOT NULL

`ttylog_session` varchar(12) NOT NULL, FOREIGN KEY REFERENCES `sessions` (`session`)

--

-- Structure for secondary table `vtlookups`

--

`vtlookup_file` varchar(64) NOT NULL

`vtlookup_id` int NOT NULL, UNIQUE, PRIMARY, AUTO_INCREMENT

`vtlookup_new` varchar(5) NOT NULL

`vtlookup_positives` int NOT NULL

`vtlookup_session` varchar(12) NOT NULL, FOREIGN KEY REFERENCES `sessions` (`session`)

`vtlookup_scans` int NOT NULL

As you can see, there's one column for each of the fields in my session-based Cowrie feed. In addition to those, I created an *_id

column in each table to serve as the primary key. I made it auto-increment and, as we'll see later, added some logic to make sure that there are no gaps in the

values.

- After you are satisfied with your table layout, click the Apply button (twice), then Finish.

Congratulations! You now have a MySQL database ready to ingest your Cowrie data.

4. Install Apache, PHP and phpMyAdmin

This step is not needed but if, like me, you want to have the ability to view your MySQL-hosted Cowrie data through a web interface and are used to configure MySQL with

the good old phpMyAdmin, you may want to consider it. I find that the easiest way to install phpMyAdmin on Windows is by using an XAMPP (Cross Apache

MariaDB PHP Perl) distribution by Apache Friends. You can download their Windows installer from their

website. At the time of my install, the latest available version of the Windows installer was 8.2.12. Installation is easy:

- Download and launch the Windows installer from the XAMPP downloads page.

- If you get a warning about Windows User Account Control (UAC) possiby interfering with the XAMPP installation, click OK to dismiss it.

-

Select the components you want to install. I really don't have much use for Mercury Mail Server, Tomcat, Perl, Webalizer, and

Fake Sendmail, and I already have MySQL (from step 1 above) and FileZilla FTP Server. Apache and PHP are required and

always installed, so I just selected phpMyAdmin.

- Accept all other installation defaults.

- If you get a Windows Defender Firewall dialog notifying you that the Apache HTTP server has been blocked, click Allow access to proceed.

- Click Finish to complete the installation.

At this point, Apache, PHP and phpMyAdmin, together with the useful

XAMP Control Panel, are installed on your system. We now need to tie together the earlier

MySQL installation with the recent phpMyAdmin installation:

-

Open the phpMyAdmin configuration file (by default, it should be

C:\xampp\phpMyAdmin\config.inc.php) and change the authentication

settings to reflect your MySQL configuration:

/* Authentication type and info */

$cfg['Servers'][$i]['auth_type'] = 'config';

$cfg['Servers'][$i]['user'] = 'root';

$cfg['Servers'][$i]['password'] = 'YOUR_MYSQL_SERVER_ROOT_PASSWORD_HERE';

$cfg['Servers'][$i]['extension'] = 'mysqli';

$cfg['Servers'][$i]['AllowNoPassword'] = true;

$cfg['Lang'] = '';

/* User for advanced features */

/* $cfg['Servers'][$i]['controluser'] = 'pma'; */

/* $cfg['Servers'][$i]['controlpass'] = ''; */

- Add the following lines to the phpMyAdin configuration file and then save it:

/* Gets rid of the following error messsage: The phpMyAdmin configuration storage is not completely configured, some extended features have been deactivated. */

$cfg['PmaNoRelation_DisableWarning'] = true;

-

Launch the XAMPP Control Panel. If you followed the steps above, you should see that MySQL, FileZilla, Mercury, and Tomcat

are grayed out (i.e., we did not install them); Apache is not.

- Click the Start button to start Apache.

-

You can now open your favorite web browser and navigate to either

localhost or 127.0.0.1.

That should open the XAMPP landing page on your local Apache server.

-

Click on the phpMyAdmin link at the top of the page. You should see the

cowrie schema and

normalized_traffic table we created in step 2 above.

5. Import the Cowrie data into the MySQL database

Instead of a more elaborate message broker-based architecture, I implemented a simpler system taking advantage of the fact that Cowrie saves the traffic it collects

in daily JSON files. I wrote a program that runs every day under the Windows Task Scheduler with the following high-level logic:

- Get the list of events recorded by Cowrie from the daily JSON file.

- Translate the native event-based Cowrie feed into a new session-based feed as described earlier.

-

Convert the list of sessions from the session-based JSON to CSV and save it as six separate files, one per table:

sessions.csv,

attempts.csv, malware.csv, tcpip.csv,

ttylogs.csv, and vtscans.csv.

-

Create scripts of SQL commands to do the data import. For example, the script

sessions.sql that imports the data from

sessions.csv into the sessions table looks like this:

SET @maxid = (SELECT COALESCE(MAX(session_id), 0) + 1 FROM cowrie.sessions);

SET @sql = CONCAT('ALTER TABLE cowrie.sessions AUTO_INCREMENT = ', @maxid);

PREPARE st FROM @sql;

EXECUTE st;

LOAD DATA INFILE 'sessions.csv'

IGNORE INTO TABLE cowrie.sessions

FIELDS TERMINATED BY ','

ENCLOSED BY '"'

ESCAPED BY ''

LINES TERMINATED BY '\n'

IGNORE 1 LINES

(session,commands,dst_ip,dst_port,dst_country,dst_asn,duration,protocol,sensor,src_ip,src_port,src_country,src_asn,timestamp,type);

Let's unpack this a a bit. The first four lines get the maximum value of the session_id column in our table, which corresponds to the most

recently entered row (session). That value, incremented by 1, is then used as the next auto-increment value. If we don't explicitely set it, it's likely that MySQL

will use an auto-increment value for the daily import data that's higher (by more than one) than the auto-increment value of the last row from the previous day. In

other words, the first four lines of our SQL script ensure that there are no gaps in the id values.

The following SQL instructions import the Cowrie data in CSV format from the sessions.csv file. The

IGNORE command instructs MySQL to continue importing data after finding an error (don't worry, diagnostics will be generated in

that case). Other instructions inform SQL that the characters to separate fields, enclosed field values, and terminate lines are

,, ", and \n, respectively, and that no escaping of special

characters is to be performed.

The IGNORE 1 LINES instruction is provided so that MySQL skips the header row with the names of the coliumns (fields), which are

explicitly listed on the last line.

-

Import the CSV information into the MySQL database by executing the

sessions.sql SQL script from the MySQL Shell:

mysqlsh -uroot -h localhost --sql < sessions.sql

-

Repeat the steps above to ingest the data in the other tables:

mysqlsh -uroot -h localhost --sql < attempts.sql

mysqlsh -uroot -h localhost --sql < malware.sql

mysqlsh -uroot -h localhost --sql < tcpip.sql

mysqlsh -uroot -h localhost --sql < ttylogs.sql

mysqlsh -uroot -h localhost --sql < vtlookups.sql

Hooray! We now have our Cowrie data — nicely normalized into sessions — available in a MySQL database. Take a few minutes to celebrate.

6. Configure the MySQL REST Service (MRS)

We now have a MySQL database that can be accessed through three different interfaces: MySQL Shell, MySQL Workbench, and browser-based phpMyAdmin.

In this next step, we'll add a fourth one in the form of a Visual Studio Code extension. Although technically speaking this is not required, it will significantly simplify the process of

setting up access to the Cowrie data on MySQL through a REST API. For this, we'll use the MySQL REST Service (MRS), a technology that enables fast and secure HTTPS access for your MySQL

data. Implemented as a MySQL Router feature, MRS provides the ability to publish RESTful web services for interacting with the data stored in MySQL solutions. I use it to programmatically

extract the Cowrie data stored in MySQL as part of my analytics workflow. Although MRS can be configured directly from the MySQL Shell, it's much easier to use the Visual Studio Code

extension. I'm assuming that you are familiar with Visual Studio Code and use it for some or all your code editing activities,

so we won't go over its installation, which is straightforward.

We are done! Our data should now be available at the

https://localhost:8443/honeypot/v1 URI through the following endpoints.

/sessions/attempts/malware/tcpip/ttylogs/vtlookups

7. Verify the MRS Core API

As the final step, we are going to test that the Cowrie data we imported into our MySQL database is indeed available through the endpoints. From

Visual Studio Code, right-click DATABASE CONNECTIONS\Cowrie\MySQL REST Service\honeypot\v1\sessions and select

Open REST Object Request Path in Web Browser. A new tab should open on your browser displaying the first 25 Cowrie sessions/attacks in JSON format.

For this to work, make sure that a MySQL Router instance is running. You can start MySQL Router from a command line terminal with the aide of the

following Bash script:

#!/bin/bash

declare +i -r MSRCONF="c:/Users/YOUR_WINDOWS_USER_ID/AppData/Roaming/MySQL/mysqlsh-gui/plugin_data/mrs_plugin/router_configs/1/mysqlrouter"

declare +i -r MSRPATH="c:/Users/YOUR_WINDOWS_USER_ID/.vscode/extensions/oracle.mysql-shell-for-vs-code-1.14.2-win32-x64/router"

declare +i pid=""

export PATH="${PATH}:${MSRPATH}/lib"

export ROUTER_PID="${MSRCONF}/mysqlrouter.pid"

pid=`ps -W | grep mysqlrouter | awk '{print $1}'`

if [ ! "${pid}" = "" ]

then

echo "MySQL Router is already running with PID = ${pid}"

exit 0

else

"${MSRPATH}/bin/mysqlrouter.exe" -c "${MSRCONF}/mysqlrouter.conf" > /dev/null 2>&1 &

disown %-

pid=`ps -W | grep mysqlrouter | awk '{print $1}'`

if [ ! "${pid}" = "" ]

then

echo "MySQL Router is running with PID = ${pid}"

exit 0

else

echo "Error: MySQL Router could not be started"

exit 1

fi

fi

For completeness, you can stop

MySQL Router with the following script:

#!/bin/bash

declare +i -r MSRCONF="c:/Users/YOUR_WINDOWS_USER_ID/AppData/Roaming/MySQL/mysqlsh-gui/plugin_data/mrs_plugin/router_configs/1/mysqlrouter"

declare +i pid=""

pid=`ps -W | grep mysqlrouter | awk '{print $1}'`

if [ ! "${pid}" = "" ]

then

echo "MySQL Router is running with PID = ${pid}"

env kill -f ${pid} > /dev/null 2>&1

pid=`ps -W | grep mysqlrouter | awk '{print $1}'`

if [ "${pid}" = "" ]

then

rm -f ${MSRCONF}/mysqlrouter.pid

echo "MySQL Router is no longer running"

exit 0

else

echo "Error: MySQL Router could not be stopped"

exit 1

fi

else

echo "MySQL Router is not running"

exit 0

fi

We can also check the API using the curl command from a terminal window or shell script. The following are examples of

curl command invocations that extract Cowrie data from the MySQL database using the MySQL REST API:

# Show the first 25 Cowrie sessions

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' | jq

# Show the first 25 scans, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'q={"type":"scan"}' | jq

# Show the first 25 attacks, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'q={"type":"attack"}' | jq

# Show the first 25 attacks with successful logins, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/attempts' -G --data-urlencode 'q={"login":"true"}' | jq

# Show the first 25 attacks with successful logins, client filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' | jq '.items[] | select(.credentials?[]?.login == "true")'

# Show the first 25 attacks with unsuccessful logins, client filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' | jq '.items[] | select(.credentials? | length > 0 and all(.login == "false"))'

# Show sessions 10,001 to 10,500, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'offset=10000&limit=500' | jq

# Show session # 12,345, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'q={"id":12345}' | jq

# Show the first 25 sessions that originated from IP addresses operating in Spain, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'q={"srcCountry":"Spain"}' | jq

# Show the first 25 sessions that originated from IP addresses operating in either Singapore or France, server filtering

curl -s -k 'https://localhost:8443/honeypot/v1/sessions' -G --data-urlencode 'q={"$or":[{"srcCountry":"Singapore"},{"srcCountry":"France"}]}' | jq

And that's all. We covered a lot of ground and should be ready to go. Happy threat hunting!

Reference: The MRS JSON filter grammar

The last example in the previous section shows how to combine filter clauses with a logical operator (in the example, $or). The complete specification

of the JSON filter grammar supported by the MySQL REST Service is as follows:

FilterObject { orderby , asof, wmembers }

orderby

"$orderby": {orderByMembers}

orderByMembers

orderByProperty

orderByProperty , orderByMembers

orderByProperty

columnName : sortingValue

sortingValue

"ASC"

"DESC"

"-1"

"1"

-1

1

asof

"$asof": date

"$asof": "datechars"

"$asof": scn

"$asof": +int

wmembers

wpair

wpair , wmembers

wpair

columnProperty

complexOperatorProperty

columnProperty

columnName : string

columnName : number

columnName : date

columnName : simpleOperatorObject

columnName : complexOperatorObject

columnName : [complexValues]

columnName

"\p{Alpha}[[\p{Alpha}]]([[\p{Alnum}]#$_])*$"

complexOperatorProperty

complexKey : [complexValues]

complexKey : simpleOperatorObject

complexKey

"$and"

"$or"

complexValues

complexValue , complexValues

complexValue

simpleOperatorObject

complexOperatorObject

columnObject

columnObject

{columnProperty}

simpleOperatorObject

{simpleOperatorProperty}

complexOperatorObject

{complexOperatorProperty}

simpleOperatorProperty

"$eq" : string | number | date

"$ne" : string | number | date

"$lt" : number | date

"$lte" : number | date

"$gt" : number | date

"$gte" : number | date

"$instr" : string

"$ninstr" : string

"$like" : string

"$null" : null

"$notnull" : null

"$between" : betweenValue

"$like": string

betweenValue

[null , betweenNotNull]

[betweenNotNull , null]

[betweenRegular , betweenRegular]

betweenNotNull

number

date

betweenRegular

string

number

date

string

JSONString

number

JSONNumber

date

{"$date":"datechars"}

scn

{"$scn": +int}

datechars is an RFC3339 date format in UTC (Z)

JSONString

""

" chars "

chars

char

char chars

char

any-Unicode-character except-"-or-\-or-control-character

\"

\\

\/

\b

\f

\n

\r

\t

\u four-hex-digits

JSONNumber

int

int frac

int exp

int frac exp

int

digit

digit1-9 digits

- digit

- digit1-9 digits

frac

. digits

exp

e digits

digits

digit

digit digits

e

e

e+

e-

E

E+

E-